A short note on multiple imputation

Background

Missing data is quite challenging to deal with. Deleting it may be the easiest solution, but may not be the best solution. Missing data can be categorised into 3 types (Rubin, 1976):

MCAR

- Missing Completely At Random

- Example; some of the observations are missing due to lost of records during the flood

MAR

- Missing At Random

- Example; variable income are missing as some participant refuse to give their salary information which they deems as very personal information

MNAR

- Missing Not At Random

- Example; weight variable is missing for morbidly obese participants since the scale is unable to weight them

Out of the 3 types above, the most problematic is MNAR, though there exist methods to deal with this type. For example, the miceMNAR package in R.

There are several approaches in handling missing data:

Listwise-deletion

- Best approach if the amount of missingness is very small

Simple imputation

- Using mean/median/mode imputation

- This approach is not advisable as it leads to bias due to reduce variance, though the mean is not affected

Single imputation

- Simple imputation above is considered as single imputation as well

- This approach ignores uncertainty of the imputation and almost always underestimate the variance

Multiple imputation

- A bit advanced and it cover the limitation of single imputation approach

However, the main assumption for any imputation methods is the missingness should be MCAR or MAR.

Multiple imputation

In short, there are 2 approaches of multiple imputation implemented by packages in R:

Joint modeling (JM) or joint multivariate normal distribution multiple imputation

- The main assumption for this method is that the observed data follows a multivariate normal distribution

- A violation of this assumption produces incorrect values, though a slight violation is still okay

- Some packages that implemented this method:

Ameliaandnorm

Fully conditional specification (FCS) or conditional multiple imputation

- Also known as multivariate imputation by chained equation (MICE)

- This approach is a bit flexible as distribution is assumed for each variable rather than the whole dataset

- Some package that implemented this method:

miceandmi

Example

In mice package, the general steps are:

mice()- impute the NAswith()- run the analysis (lm, glm, etc)pool()- pool the results

Figure 1: Main steps in mice package.

These are the required packages.

library(tidyverse)

library(mice)

library(VIM)

#library(missForest) we want to use prodNA() function from this package

library(naniar)

library(niceFunction) #install from github (https://github.com/tengku-hanis/niceFunction)

library(dplyr)

library(gtsummary)We going to produce some NAs randomly.

set.seed(123)

dat <- iris %>%

select(-Sepal.Length)%>%

missForest::prodNA(0.2) %>% # randomly insert 20% NAs

mutate(Sepal.Length = iris$Sepal.Length)Explore the NAs and the data.

naniar::miss_var_summary(dat)## # A tibble: 5 x 3

## variable n_miss pct_miss

## <chr> <int> <dbl>

## 1 Petal.Length 38 25.3

## 2 Sepal.Width 33 22

## 3 Species 28 18.7

## 4 Petal.Width 21 14

## 5 Sepal.Length 0 0Some references recommend to remove variables with more than 50% NAs. However, we purposely introduce 20% NAs into our data.

As a guideline, we can check for MCAR for our NAs.

naniar::mcar_test(dat) #p > 0.05, MCAR is indicated## # A tibble: 1 x 4

## statistic df p.value missing.patterns

## <dbl> <dbl> <dbl> <int>

## 1 38.8 40 0.522 14Next step is to evaluate the pattern of missingness in our data.

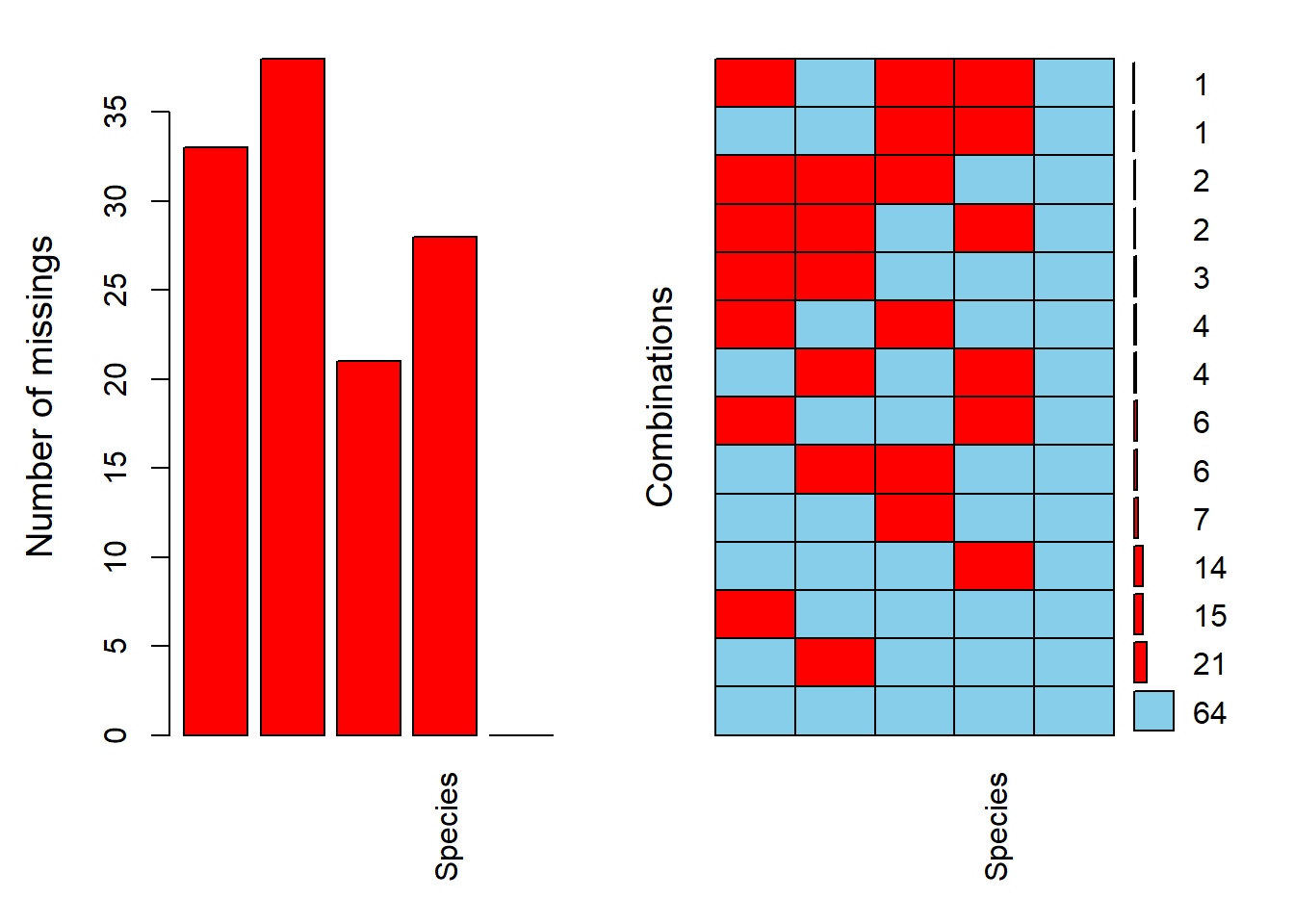

md.pattern(dat, rotate.names = T, plot = T)

## Sepal.Length Petal.Width Species Sepal.Width Petal.Length

## 64 1 1 1 1 1 0

## 21 1 1 1 1 0 1

## 15 1 1 1 0 1 1

## 3 1 1 1 0 0 2

## 14 1 1 0 1 1 1

## 4 1 1 0 1 0 2

## 6 1 1 0 0 1 2

## 2 1 1 0 0 0 3

## 7 1 0 1 1 1 1

## 6 1 0 1 1 0 2

## 4 1 0 1 0 1 2

## 2 1 0 1 0 0 3

## 1 1 0 0 1 1 2

## 1 1 0 0 0 1 3

## 0 21 28 33 38 120aggr(dat, prop = F, numbers = T)

We have 13 patterns (numbers on the right) of NAs in our data. These 2 functions work well with small dataset, but with a larger dataset (and with lot more pattern of NAs), it’s probably quite difficult to assess the pattern.

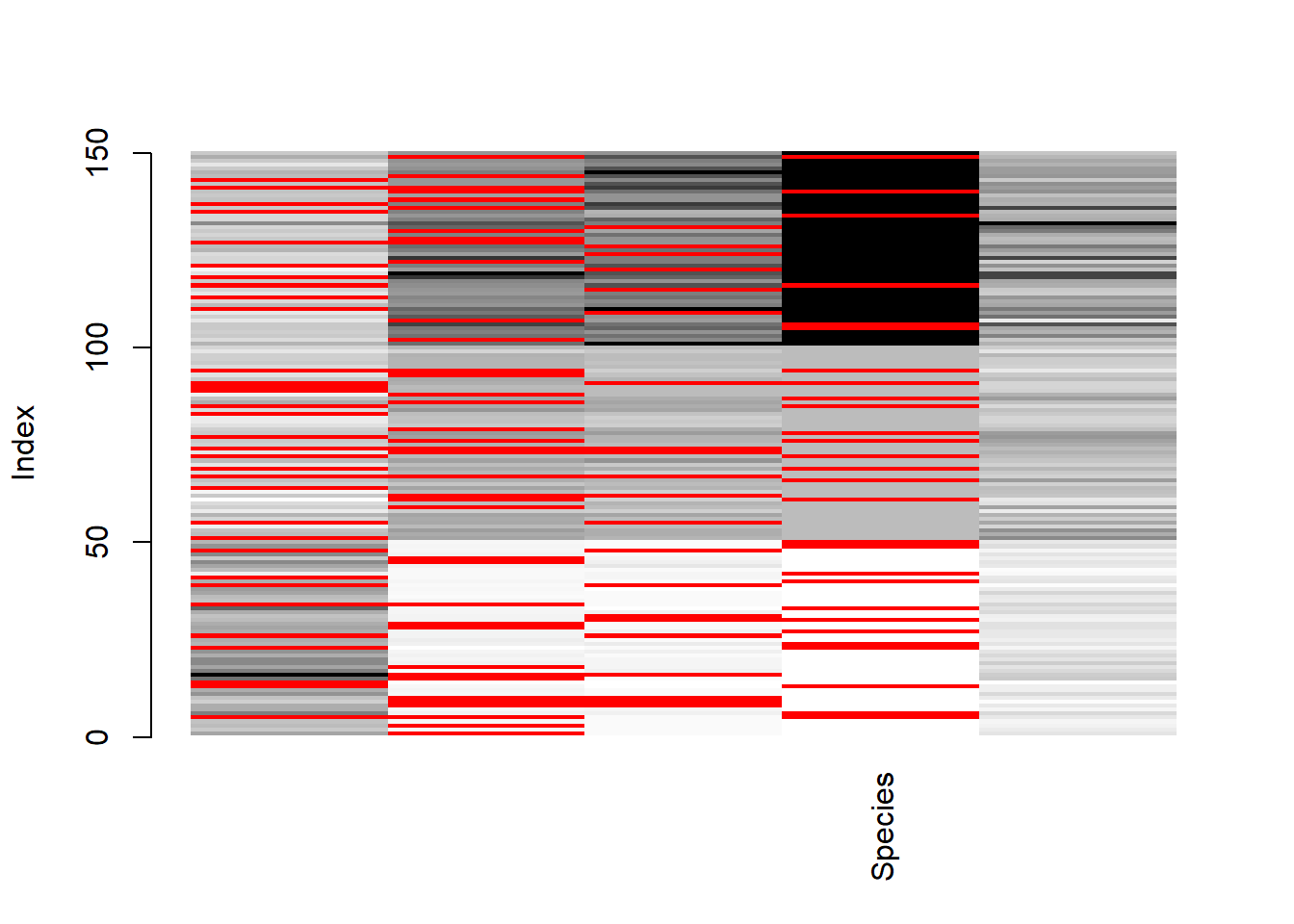

matrixplot() probably more appropriate for a larger dataset.

matrixplot(dat)

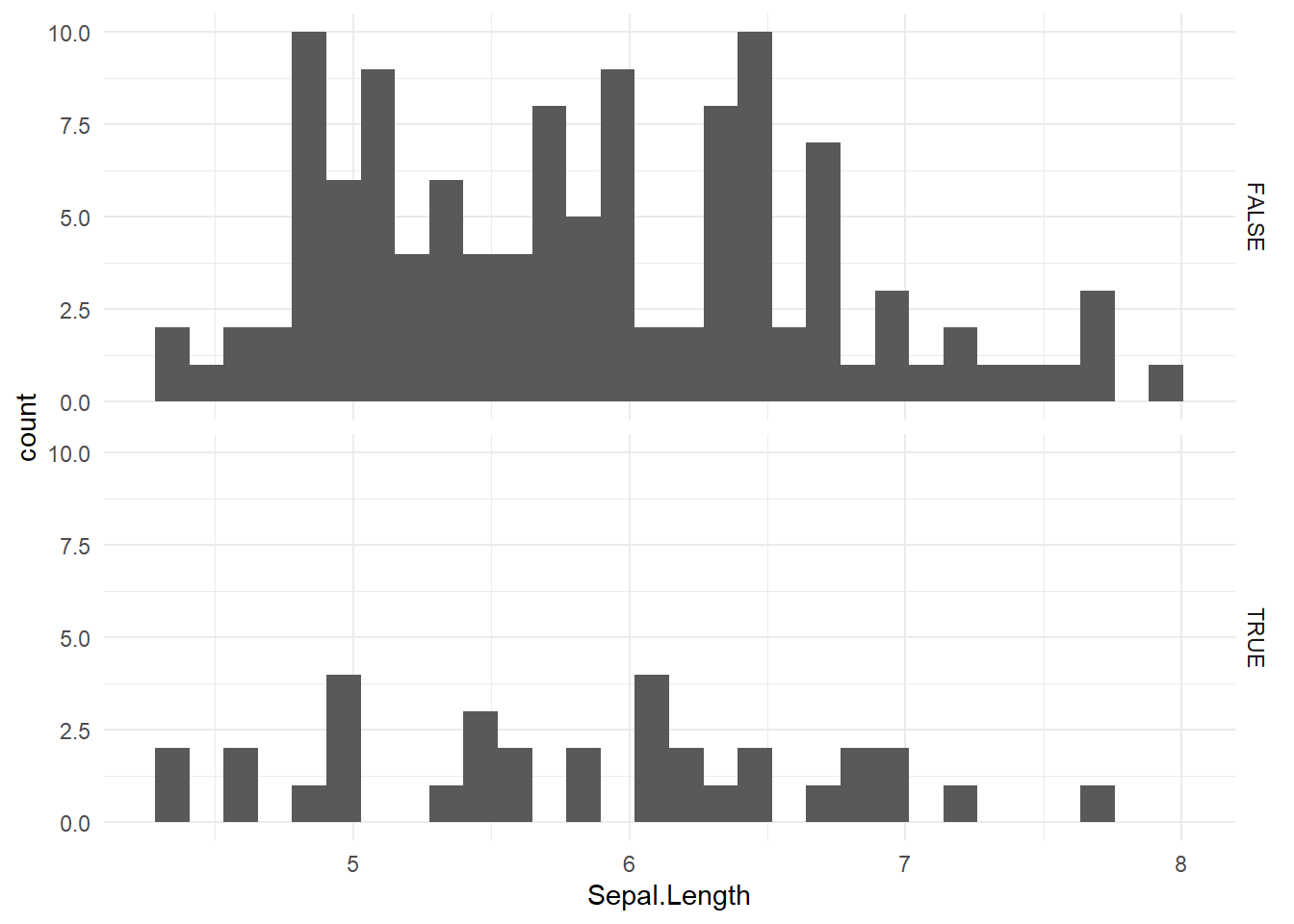

In terms of the missingness pattern, we can also assess the distribution of NAs of Sepal.Width is dependent on the variable Sepal.Length.

niceFunction::histNA_byVar(dat, Sepal.Width, Sepal.Length)

As we can see the distribution and range of the histograms of the NAs (True) and non-NAs (False) is quite similar. Thus, this may indicated that Sepal.Width is at least MAR. However, by right we should do this for each pair of numerical variable before jumping into any conclusion.

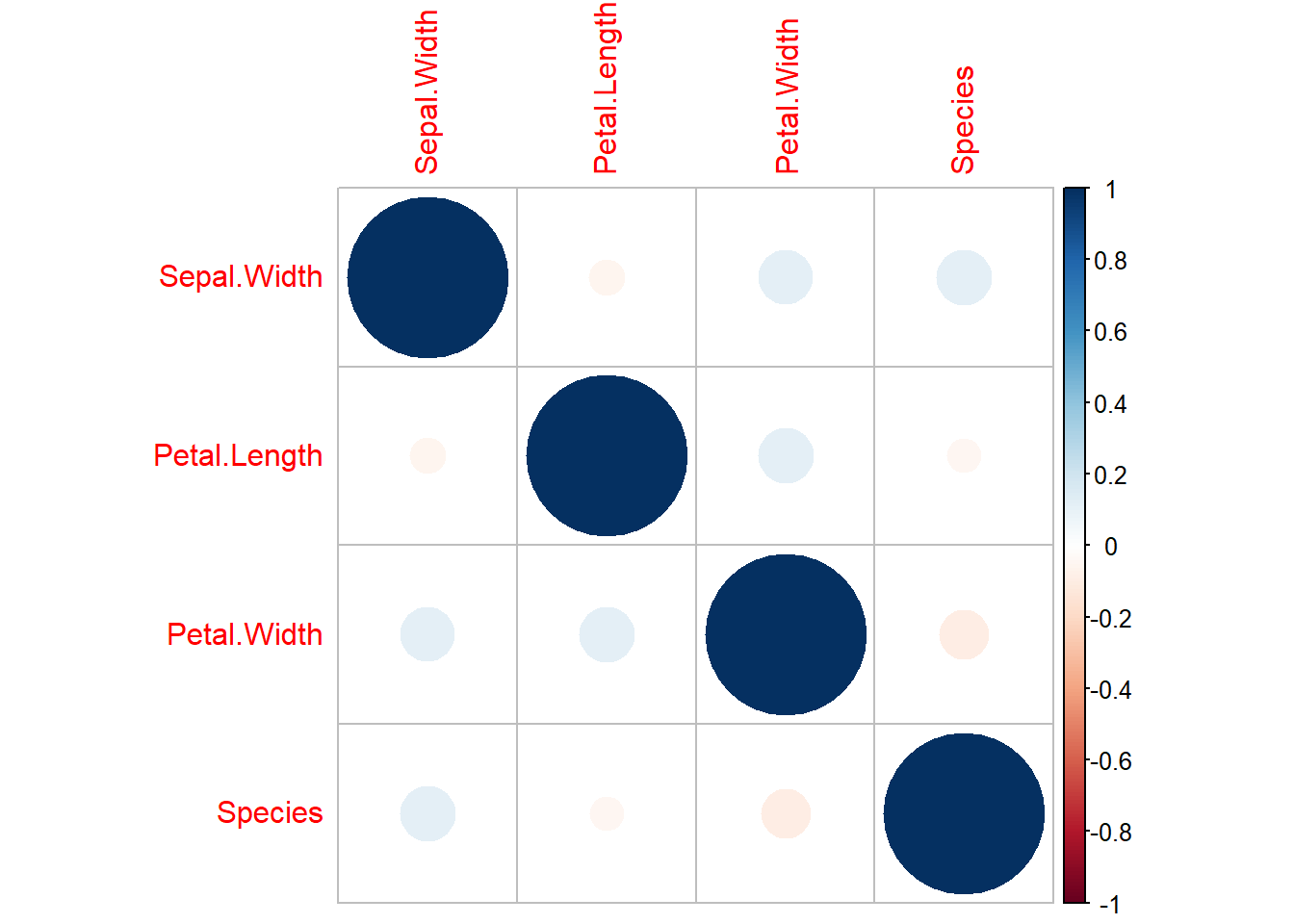

Another good thing to assess is the correlation.

# Data with 1 = NAs, 0 = non-NAs

x <- as.data.frame(abs(is.na(dat))) %>%

dplyr::select(-Sepal.Length) #pick variable with NAs onlyFirstly, the correlation between the variables with missing data.

cor(x) %>%

corrplot::corrplot()

No high correlation among variable with NAs. Secondly, let’s see correlation between NAs in a variable and the observed values of other variables.

cor(dat %>% mutate(Species = as.numeric(Species)), x, use = "pairwise.complete.obs")## Sepal.Width Petal.Length Petal.Width Species

## Sepal.Width NA 0.049158733 -0.065917718 0.09948263

## Petal.Length 0.042075695 NA -0.004572405 -0.17265919

## Petal.Width 0.096195805 -0.003320601 NA -0.11024288

## Species 0.045849046 -0.104143925 -0.081055707 NA

## Sepal.Length -0.006435044 -0.052871701 -0.091024799 -0.08527514Again, there is no high correlation. But, if we were to interpret this correlation matrix; the rows are the observed variables and the columns represent the missingness. For example, missing values of Sepal.Width is more likely to be missing for observations with a high value of Petal.Width (r = 0.05 indicates it’s highly unlikely though).

Now, we can do multiple imputation. These are the methods in the mice package:

methods(mice)## [1] mice.impute.2l.bin mice.impute.2l.lmer mice.impute.2l.norm

## [4] mice.impute.2l.pan mice.impute.2lonly.mean mice.impute.2lonly.norm

## [7] mice.impute.2lonly.pmm mice.impute.cart mice.impute.jomoImpute

## [10] mice.impute.lda mice.impute.logreg mice.impute.logreg.boot

## [13] mice.impute.mean mice.impute.midastouch mice.impute.mnar.logreg

## [16] mice.impute.mnar.norm mice.impute.norm mice.impute.norm.boot

## [19] mice.impute.norm.nob mice.impute.norm.predict mice.impute.panImpute

## [22] mice.impute.passive mice.impute.pmm mice.impute.polr

## [25] mice.impute.polyreg mice.impute.quadratic mice.impute.rf

## [28] mice.impute.ri mice.impute.sample mice.mids

## [31] mice.theme

## see '?methods' for accessing help and source codeBy default, mice uses:

- pmm (predictive mean matching) for numeric data

- logreg (logistic regression imputation) for binary data, factor with 2 levels

- polyreg (polytomous regression imputation) for unordered categorical data (factor > 2 levels)

- polr (proportional odds model) for ordered, > 2 levels

let’s run the mice function to our data:

imp <- mice(dat, m = 5, seed=1234, maxit = 5, printFlag = F)

imp## Class: mids

## Number of multiple imputations: 5

## Imputation methods:

## Sepal.Width Petal.Length Petal.Width Species Sepal.Length

## "pmm" "pmm" "pmm" "polyreg" ""

## PredictorMatrix:

## Sepal.Width Petal.Length Petal.Width Species Sepal.Length

## Sepal.Width 0 1 1 1 1

## Petal.Length 1 0 1 1 1

## Petal.Width 1 1 0 1 1

## Species 1 1 1 0 1

## Sepal.Length 1 1 1 1 0Next, we can do some diagnostic assessment on the imputed data. This is our imputed data.

imp$imp$Sepal.Width %>% head()## 1 2 3 4 5

## 5 3.4 3.4 4.1 3.1 3.5

## 13 3.2 3.1 3.2 3.6 3.1

## 14 3.1 3.2 2.9 3.4 3.0

## 23 3.6 3.2 3.0 3.8 3.1

## 26 4.1 3.0 3.1 3.5 3.0

## 34 3.4 3.7 3.7 3.4 4.4One important thing to check is the convergence. We are going increase the number of iteration for this.

imp_conv <- mice.mids(imp, maxit = 30, printFlag = F)

plot(imp_conv)

The line in the plot should be intermingled and no obvious trend should be observed. Our plot above indicates a convergence.

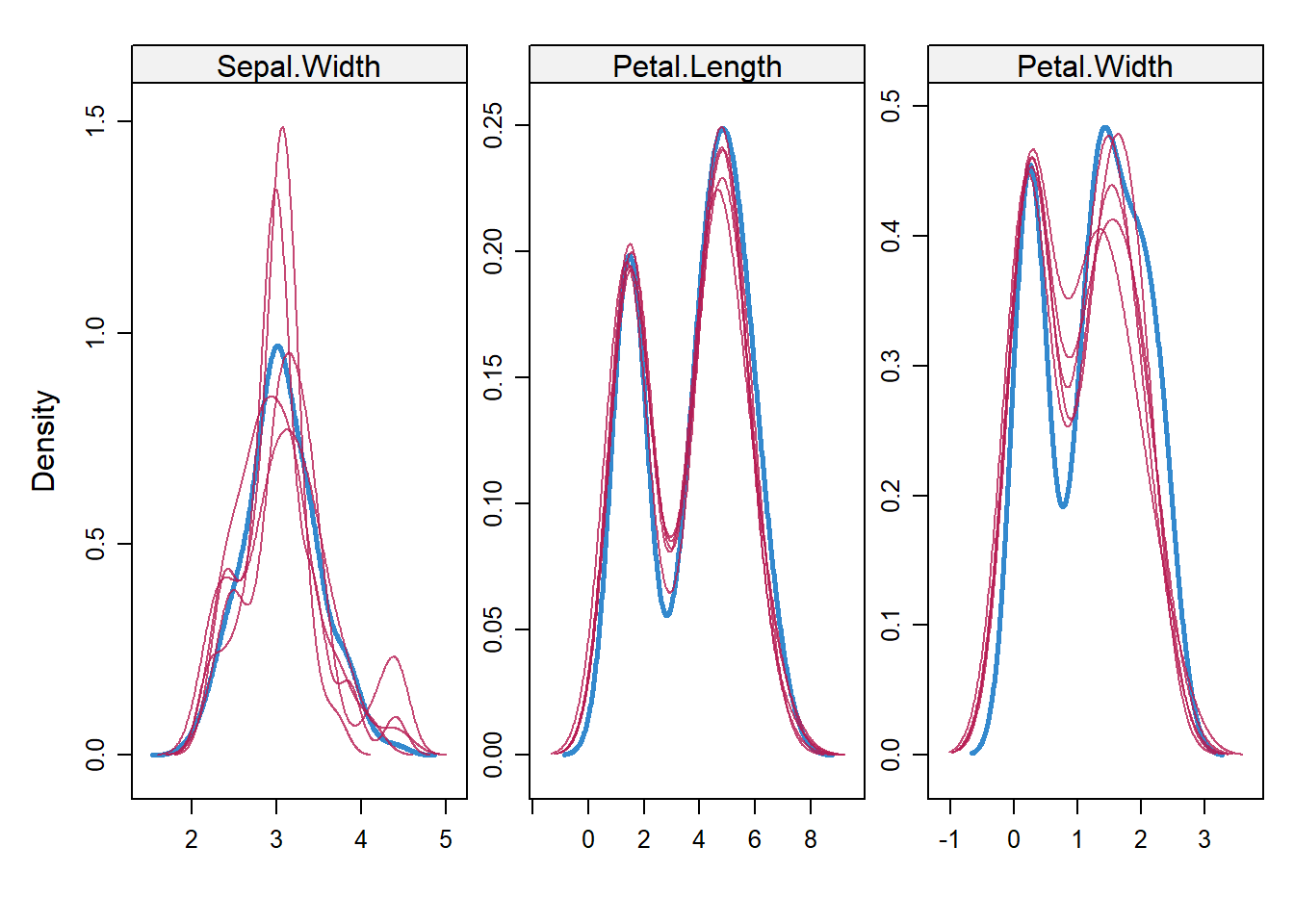

We can also assess density plot of imputed data and the observed data. Blue color is the observed data and red color is the imputed data.

densityplot(imp)

We can further assess variable Sepal.Width.

densityplot(imp, ~ Sepal.Width | .imp)

Lastly, we can assess the strip plot. The imputed observations (red color) should not distributed too far from the observed data (blue color).

stripplot(imp)

So, once we finish the diagnostic checking, we can actually go back and change the imputation method for Sepal.Width, since the its distribution changes quite differently at each iteration. But, we are not going to do that, instead we are going to do the analysis.

# run regression

fit <- with(imp, lm(Sepal.Length ~ Sepal.Width + Petal.Length + Petal.Width + Species))

# pool all imputed set

pooled <- pool(fit)

summary(pooled)## term estimate std.error statistic df p.value

## 1 (Intercept) 2.2008307 0.34577321 6.364954 29.02484 5.859560e-07

## 2 Sepal.Width 0.5233500 0.09717217 5.385801 50.89918 1.854832e-06

## 3 Petal.Length 0.7409159 0.09020153 8.214006 12.73722 1.921415e-06

## 4 Petal.Width -0.3623895 0.18562168 -1.952301 22.34517 6.354332e-02

## 5 Speciesversicolor -0.3891112 0.28166528 -1.381467 15.07547 1.872683e-01

## 6 Speciesvirginica -0.5237106 0.42629920 -1.228505 10.82804 2.452897e-01Since we have the original dataset without the NAs, we going to compare them.

mimpute <-

fit %>%

tbl_regression() #with mice

noimpute <-

dat %>%

lm(Sepal.Length ~ ., data = .) %>%

tbl_regression() #w/o mice

original <-

iris %>%

lm(Sepal.Length ~ ., data = .) %>%

tbl_regression() #original data

tbl_merge(

tbls = list(mimpute, noimpute, original),

tab_spanner = c("With MICE", "Without MICE", "Original data")

)| Characteristic | With MICE | Without MICE | Original data | ||||||

|---|---|---|---|---|---|---|---|---|---|

| Beta | 95% CI1 | p-value | Beta | 95% CI1 | p-value | Beta | 95% CI1 | p-value | |

| Sepal.Width | 0.52 | 0.33, 0.72 | <0.001 | 0.48 | 0.17, 0.79 | 0.003 | 0.50 | 0.33, 0.67 | <0.001 |

| Petal.Length | 0.74 | 0.55, 0.94 | <0.001 | 0.71 | 0.51, 0.90 | <0.001 | 0.83 | 0.69, 1.0 | <0.001 |

| Petal.Width | -0.36 | -0.75, 0.02 | 0.064 | -0.35 | -0.85, 0.14 | 0.2 | -0.32 | -0.61, -0.02 | 0.039 |

| Species | |||||||||

| setosa | — | — | — | — | — | — | |||

| versicolor | -0.39 | -1.0, 0.21 | 0.2 | -0.42 | -1.1, 0.30 | 0.3 | -0.72 | -1.2, -0.25 | 0.003 |

| virginica | -0.52 | -1.5, 0.42 | 0.2 | -0.42 | -1.5, 0.63 | 0.4 | -1.0 | -1.7, -0.36 | 0.003 |

|

1

CI = Confidence Interval

|

|||||||||

There is a different in the result between the original dataset (no NAs) and with mice imputation. Probably, exploring other imputation methods will produce a better result.

There are a lot more that are not cover in this post. For example passive imputation and post-processing. In fact, there are a series of vignettes written by Gerko Vink and Stef van Buuren (both are the authors of mice) which provides a good tutorial on using mice though quite advanced.

Suggested online books (though, I have not really studied both of the books yet):

- Flexible imputation of missing data by Stef van Buuren

- Applied missing data analysis with SPSS and (R)Studio

References for this post:

- R in Action, Data analysis and graphics with R (Chapter 15)

- https://data.library.virginia.edu/getting-started-with-multiple-imputation-in-r/

- https://stats.idre.ucla.edu/r/faq/how-do-i-perform-multiple-imputation-using-predictive-mean-matching-in-r/

- mice: Multivariate Imputation by Chained Equations in R